Verifying Service Communication

Socket-Based Load Balancing

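Conventional load balancing performs DNAT translation and reverse translation on the traffic, which adds overhead. Socket-based load balancing was introduced to remove that overhead. When a Pod connects to a service, the destination is set to a backend address at connect() time and the connection proceeds with that address, so no DNAT translation or reverse translation is needed.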

Hands-on

Deploy the service

cat <<EOF | kubectl create -f -
apiVersion: v1
kind: Service
metadata:
  name: svc
spec:
  ports:
    - name: svc-webport
      port: 80
      targetPort: 80
  selector:
    app: webpod
  type: ClusterIP
EOF

Check iptables: there are no KUBE-SVC entries.

iptables-save | grep KUBE-SVC

Set the service address variable and generate traffic

SVCIP=$(kubectl get svc svc -o jsonpath='{.spec.clusterIP}')

while true; do kubectl exec netpod -- curl -s $SVCIP | grep Hostname;echo "-----";sleep 1;done

Check the packets

kubectl exec netpod -- tcpdump -enni any -q

---

14:22:15.931722 eth0 Out ifindex 10 f2:58:a2:c8:85:09 172.16.0.105.60938 > 172.16.1.158.80: tcp 0

14:22:15.932791 eth0 In ifindex 10 a6:76:c0:12:7c:06 172.16.1.158.80 > 172.16.0.105.60938: tcp 0
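The capture above can be checked mechanically: with socket-based load balancing, the ClusterIP should never appear on the wire, only the Pod and backend addresses. The following is a minimal sketch of such a check; `count_packets_with_ip` is a hypothetical helper name, and the sample text is the two tcpdump lines shown above together with the ClusterIP (10.10.133.140) reported by the service listing later in this section.

```shell
#!/usr/bin/env bash
# Hypothetical helper: reads tcpdump text on stdin and prints how many
# lines contain IP (followed by ".port") as a source or destination.
count_packets_with_ip() {
  local ip=$1
  # Escape the dots so they match literally in the regular expression.
  local pattern="${ip//./\\.}"
  # grep -c exits non-zero when the count is 0, hence the "|| true".
  grep -cE "(^|[^0-9.])${pattern}\.[0-9]+[ :]" || true
}

capture='14:22:15.931722 eth0 Out ifindex 10 f2:58:a2:c8:85:09 172.16.0.105.60938 > 172.16.1.158.80: tcp 0
14:22:15.932791 eth0 In ifindex 10 a6:76:c0:12:7c:06 172.16.1.158.80 > 172.16.0.105.60938: tcp 0'

echo "ClusterIP packets: $(printf '%s\n' "$capture" | count_packets_with_ip 10.10.133.140)"
echo "Backend packets:   $(printf '%s\n' "$capture" | count_packets_with_ip 172.16.1.158)"
```

In a live cluster the same function could read from `kubectl exec netpod -- tcpdump ...` instead of the sample variable.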

Check the service

c0 service list

---

ID   Frontend           Service Type   Backend
...
10   10.10.133.140:80   ClusterIP      1 => 172.16.2.207:80 (active)
                                       2 => 172.16.1.158:80 (active)

c0 bpf lb list | grep 10.10.133.140

---

10.10.133.140:80 (0) 0.0.0.0:0 (10) (0) [ClusterIP, non-routable]

10.10.133.140:80 (1) 172.16.2.207:80 (10) (1)

10.10.133.140:80 (2) 172.16.1.158:80 (10) (2)

---

Check the Cilium socket-based LB settings

c0 status --verbose

---

...

KubeProxyReplacement Details:

Status: True

Socket LB: Enabled

Socket LB Tracing: Enabled

Socket LB Coverage: Full

...

Services:

- ClusterIP: Enabled

- NodePort: Enabled (Range: 30000-32767)

- LoadBalancer: Enabled

- externalIPs: Enabled

- HostPort: Enabled

...

Check the cgroup

cilium config view | grep cgroup

---

cgroup-root /run/cilium/cgroupv2

Check mount-cgroup

kubetail -n kube-system -c mount-cgroup --since 12h

---

[cilium-t6xjt] time="2024-10-26T22:47:46+09:00" level=info msg="Mounted cgroupv2 filesystem at /run/cilium/cgroupv2" subsys=cgroups

[cilium-mdsr2] time="2024-10-26T22:47:46+09:00" level=info msg="Mounted cgroupv2 filesystem at /run/cilium/cgroupv2" subsys=cgroups

[cilium-fctwq] time="2024-10-26T22:48:08+09:00" level=info msg="Mounted cgroupv2 filesystem at /run/cilium/cgroupv2" subsys=cgroups

Check the behavior inside the Pod

kubectl exec netpod -- strace -e trace=connect curl -s $SVCIP

---

connect(5, {sa_family=AF_INET, sin_port=htons(80), sin_addr=inet_addr("10.10.133.140")}, 16) = -1 EINPROGRESS (Operation in progress)

kubectl exec netpod -- strace -e trace=getsockname curl -s $SVCIP

---

getsockname(5, {sa_family=AF_INET, sin_port=htons(48144), sin_addr=inet_addr("172.16.0.105")}, [128 => 16]) = 0

getsockname(5, {sa_family=AF_INET, sin_port=htons(48144), sin_addr=inet_addr("172.16.0.105")}, [128 => 16]) = 0

getsockname(5, {sa_family=AF_INET, sin_port=htons(48144), sin_addr=inet_addr("172.16.0.105")}, [128 => 16]) = 0

getsockname(5, {sa_family=AF_INET, sin_port=htons(48144), sin_addr=inet_addr("172.16.0.105")}, [128 => 16]) = 0

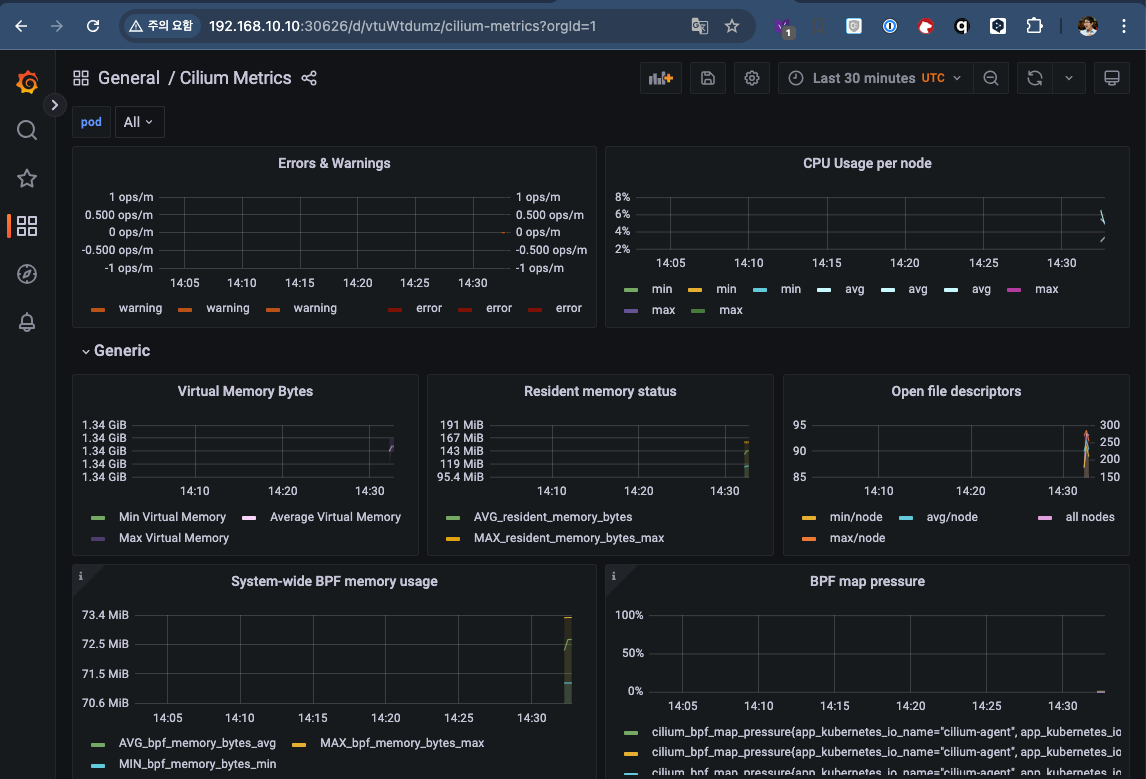

Running Prometheus & Grafana

Deploy

kubectl apply -f https://raw.githubusercontent.com/cilium/cilium/1.16.3/examples/kubernetes/addons/prometheus/monitoring-example.yaml

kubectl get all -n cilium-monitoring

Check the Pods and Services

kubectl get pod,svc,ep -o wide -n cilium-monitoring

Configure NodePort

kubectl patch svc grafana -n cilium-monitoring -p '{"spec": {"type": "NodePort"}}'

kubectl patch svc prometheus -n cilium-monitoring -p '{"spec": {"type": "NodePort"}}'

Grafana web access

GPT=$(kubectl get svc -n cilium-monitoring grafana -o jsonpath='{.spec.ports[0].nodePort}')

echo -e "Grafana URL = http://$(curl -s ipinfo.io/ip):$GPT"

Check the Prometheus web access information

PPT=$(kubectl get svc -n cilium-monitoring prometheus -o jsonpath='{.spec.ports[0].nodePort}')

echo -e "Prometheus URL = http://$(curl -s ipinfo.io/ip):$PPT"

Direct Server Return(DSR)

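With Direct Server Return, the response to a client request is sent straight to the client without passing back through the load balancer, which reduces the load on the load balancer.

Hands-on

Configure DSR

helm upgrade cilium cilium/cilium --namespace kube-system --reuse-values --set loadBalancer.mode=dsr

Verify the configuration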

cilium config view | grep dsr

---

bpf-lb-mode dsr

c0 status --verbose | grep 'KubeProxyReplacement Details:' -A7

---

KubeProxyReplacement Details:

Status: True

Socket LB: Enabled

Socket LB Tracing: Enabled

Socket LB Coverage: Full

Devices: ens5 192.168.10.10 fe80::10:54ff:fe61:2d31 (Direct Routing)

Mode: DSR

DSR Dispatch Mode: IP Option/Extension

Create the Pods and Service

cat <<EOF | kubectl create -f -
apiVersion: v1
kind: Pod
metadata:
  name: netpod
  labels:
    app: netpod
spec:
  nodeName: k8s-m
  containers:
  - name: netshoot-pod
    image: nicolaka/netshoot
    command: ["tail"]
    args: ["-f", "/dev/null"]
  terminationGracePeriodSeconds: 0
---
apiVersion: v1
kind: Pod
metadata:
  name: webpod1
  labels:
    app: webpod
spec:
  nodeName: k8s-w1
  containers:
  - name: container
    image: traefik/whoami
  terminationGracePeriodSeconds: 0
---
apiVersion: v1
kind: Service
metadata:
  name: svc1
spec:
  ports:
    - name: svc1-webport
      port: 80
      targetPort: 80
  selector:
    app: webpod
  type: NodePort
EOF

SVCNPORT=$(kubectl get svc svc1 -o jsonpath='{.spec.ports[0].nodePort}')

while true; do curl -s k8s-s:$SVCNPORT | grep Hostname;echo "-----";sleep 1;done

c1 monitor -vv

------------------------------------------------------------------------------

Ethernet {Contents=[..14..] Payload=[..54..] SrcMAC=02:10:54:61:2d:31 DstMAC=02:f4:36:ac:e2:79 EthernetType=IPv4 Length=0}

IPv4 {Contents=[..20..] Payload=[..32..] Version=4 IHL=5 TOS=0 Length=52 Id=39220 Flags=DF FragOffset=0 TTL=64 Protocol=TCP Checksum=10568 SrcIP=192.168.10.10 DstIP=172.16.1.133 Options=[] Padding=[]}

TCP {Contents=[..32..] Payload=[] SrcPort=50770 DstPort=80(http) Seq=1089375609 Ack=1881484550 DataOffset=8 FIN=true SYN=false RST=false PSH=false ACK=true URG=false ECE=false CWR=false NS=false Window=489 Checksum=30830 Urgent=0 Options=[TCPOption(NOP:), TCPOption(NOP:), TCPOption(Timestamps:619911905/2430833137 0x24f31ae190e391f1)] Padding=[]}

CPU 03: MARK 0xcec070bb FROM 598 to-network: 66 bytes (66 captured), state established, interface ens5, orig-ip 192.168.10.10

------------------------------------------------------------------------------

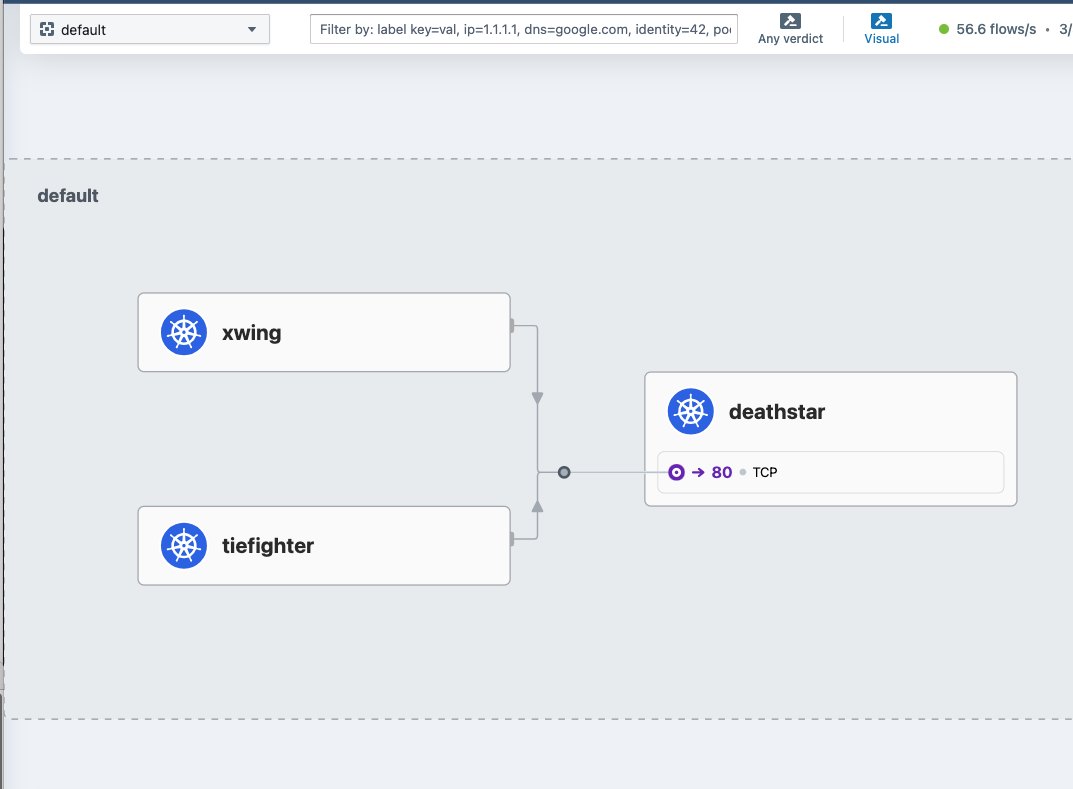

Network Policy(L3, L4, L7)

Hands-on

kubectl create -f https://raw.githubusercontent.com/cilium/cilium/1.16.3/examples/minikube/http-sw-app.yaml

Test

kubectl exec xwing -- curl -s -XPOST deathstar.default.svc.cluster.local/v1/request-landing

---

Ship landed

kubectl exec tiefighter -- curl -s -XPOST deathstar.default.svc.cluster.local/v1/request-landing

---

Ship landed

Identity-Aware and HTTP-Aware Policy Enforcement: Apply an L3/L4 Policy

- Cilium applies security policy based on Pod **labels** rather than endpoint IP addresses.

- Filtering by IP/port is called an L3/L4 network policy.

- The policy below allows access only from Pods that carry the 'org=empire' label.

- Cilium performs stateful connection tracking, so return traffic is allowed automatically.
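The label-based matching described above can be illustrated with a toy model. This is only a sketch of the idea, not Cilium's implementation: `selector_matches` is a hypothetical function, and the label sets for tiefighter and xwing are assumed to be what the demo manifest assigns.

```shell
#!/usr/bin/env bash
# Toy model of label-based selection (hypothetical, not Cilium code).
# selector_matches SELECTOR LABELS
# Both arguments are comma-separated key=value lists. Succeeds when every
# selector entry is present in the label set.
selector_matches() {
  local selector=$1 labels=",$2,"
  local entry
  local IFS=','
  for entry in $selector; do
    [[ $labels == *",$entry,"* ]] || return 1
  done
  return 0
}

# Assumed labels of the two client Pods in the demo.
tiefighter_labels="org=empire,class=tiefighter"
xwing_labels="org=alliance,class=xwing"

# The ingress rule admits sources matching org=empire.
for pod in tiefighter xwing; do
  var="${pod}_labels"
  if selector_matches "org=empire" "${!var}"; then
    echo "$pod: allowed"
  else
    echo "$pod: denied"
  fi
done
```

Because the decision depends only on labels, a Pod keeps the same verdict when it is rescheduled and receives a new IP address.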

Deploy the policy

cat <<EOF | kubectl apply -f -
apiVersion: "cilium.io/v2"
kind: CiliumNetworkPolicy
metadata:
  name: "rule1"
spec:
  description: "L3-L4 policy to restrict deathstar access to empire ships only"
  endpointSelector:
    matchLabels:
      org: empire
      class: deathstar
  ingress:
  - fromEndpoints:
    - matchLabels:
        org: empire
    toPorts:
    - ports:
      - port: "80"
        protocol: TCP
EOF

kc describe cnp rule1

---

Name: rule1

Namespace: default

Labels: <none>

Annotations: <none>

API Version: cilium.io/v2

Kind: CiliumNetworkPolicy

Metadata:

Creation Timestamp: 2024-10-26T15:14:30Z

Generation: 1

Resource Version: 5329

UID: 946afc91-ccf4-4dcb-94f5-edf526a22b97

Spec:

Description: L3-L4 policy to restrict deathstar access to empire ships only

Endpoint Selector:

Match Labels:

Class: deathstar

Org: empire

Ingress:

From Endpoints:

Match Labels:

Org: empire

To Ports:

Ports:

Port: 80

Protocol: TCP

Status:

Conditions:

Last Transition Time: 2024-10-26T15:14:30Z

Message: Policy validation succeeded

Status: True

Type: Valid

Events: <none>

c0 policy get

---

[

{

"endpointSelector": {

"matchLabels": {

"any:class": "deathstar",

"any:org": "empire",

"k8s:io.kubernetes.pod.namespace": "default"

}

},

"ingress": [

{

"fromEndpoints": [

{

"matchLabels": {

"any:org": "empire",

"k8s:io.kubernetes.pod.namespace": "default"

}

}

],

"toPorts": [

{

"ports": [

{

"port": "80",

"protocol": "TCP"

}

]

}

]

}

],

"labels": [

{

"key": "io.cilium.k8s.policy.derived-from",

"value": "CiliumNetworkPolicy",

"source": "k8s"

},

{

"key": "io.cilium.k8s.policy.name",

"value": "rule1",

"source": "k8s"

},

{

"key": "io.cilium.k8s.policy.namespace",

"value": "default",

"source": "k8s"

},

{

"key": "io.cilium.k8s.policy.uid",

"value": "946afc91-ccf4-4dcb-94f5-edf526a22b97",

"source": "k8s"

}

],

"enableDefaultDeny": {

"ingress": true,

"egress": false

},

"description": "L3-L4 policy to restrict deathstar access to empire ships only"

}

]

Revision: 2

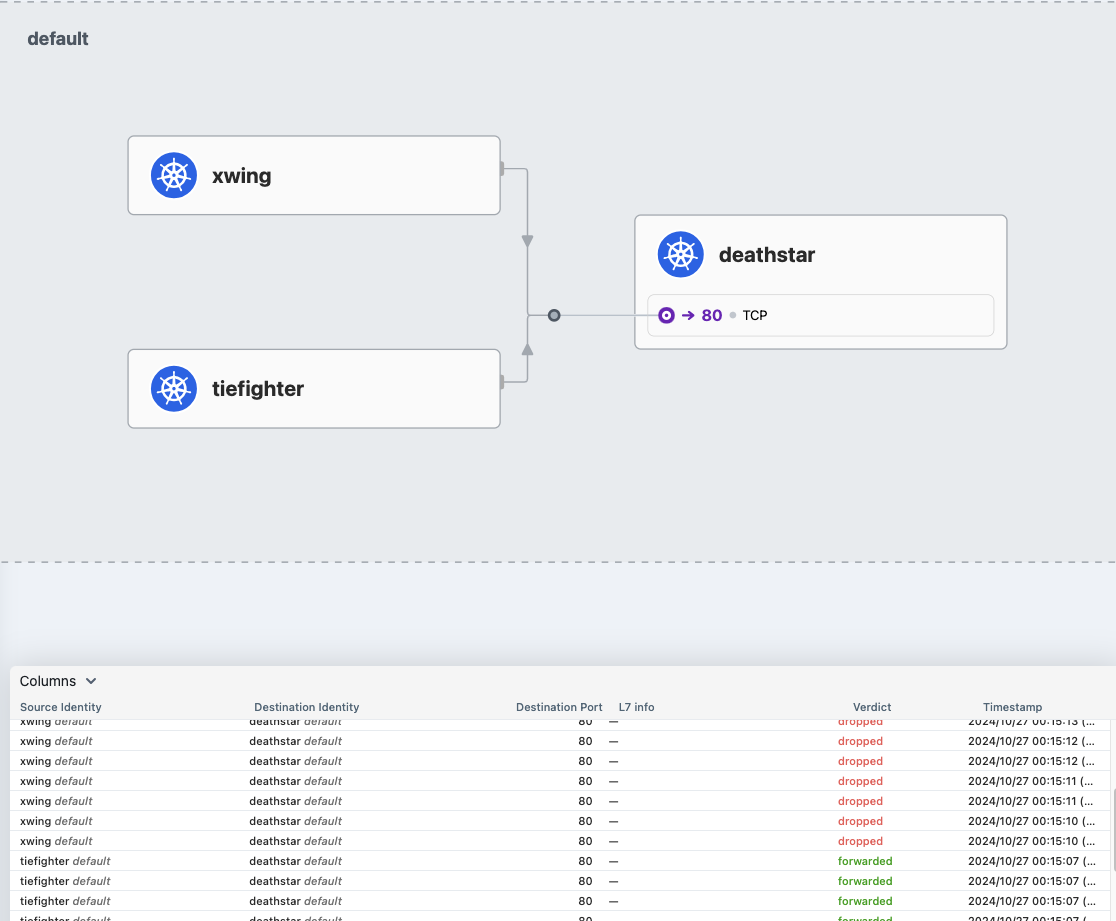

Test

kubectl exec tiefighter -- curl -s -XPOST deathstar.default.svc.cluster.local/v1/request-landing

Ship landed

kubectl exec xwing -- curl -s -XPOST deathstar.default.svc.cluster.local/v1/request-landing

(No response: the connection is dropped by the policy.)

hubble observe --pod deathstar --verdict DROPPED

---

...

Oct 26 15:15:46.416: default/xwing:49446 (ID:13058) <> default/deathstar-689f66b57d-kccrz:80 (ID:17839) Policy denied DROPPED (TCP Flags: SYN)

...

Identity-Aware and HTTP-Aware Policy Enforcement: Apply and Test HTTP-aware L7 Policy

Update the policy

cat <<EOF | kubectl apply -f -
apiVersion: "cilium.io/v2"
kind: CiliumNetworkPolicy
metadata:
  name: "rule1"
spec:
  description: "L7 policy to restrict access to specific HTTP call"
  endpointSelector:
    matchLabels:
      org: empire
      class: deathstar
  ingress:
  - fromEndpoints:
    - matchLabels:
        org: empire
    toPorts:
    - ports:
      - port: "80"
        protocol: TCP
      rules:
        http:
        - method: "POST"
          path: "/v1/request-landing"
EOF

Verify

kc describe ciliumnetworkpolicies

---

Name: rule1

Namespace: default

Labels: <none>

Annotations: <none>

API Version: cilium.io/v2

Kind: CiliumNetworkPolicy

Metadata:

Creation Timestamp: 2024-10-26T15:14:30Z

Generation: 2

Resource Version: 5893

UID: 946afc91-ccf4-4dcb-94f5-edf526a22b97

Spec:

Description: L7 policy to restrict access to specific HTTP call

Endpoint Selector:

Match Labels:

Class: deathstar

Org: empire

Ingress:

From Endpoints:

Match Labels:

Org: empire

To Ports:

Ports:

Port: 80

Protocol: TCP

Rules:

Http:

Method: POST

Path: /v1/request-landing

Status:

Conditions:

Last Transition Time: 2024-10-26T15:14:30Z

Message: Policy validation succeeded

Status: True

Type: Valid

Events: <none>

Test

kubectl exec tiefighter -- curl -s -XPOST deathstar.default.svc.cluster.local/v1/request-landing

---

Ship landed

kubectl exec tiefighter -- curl -s -XPUT deathstar.default.svc.cluster.local/v1/exhaust-port

---

Access denied

hubble observe --pod deathstar --verdict DROPPED

---

Oct 26 15:19:47.742: default/tiefighter:49920 (ID:56650) -> default/deathstar-689f66b57d-t99c9:80 (ID:17839) http-request DROPPED (HTTP/1.1 PUT http://deathstar.default.svc.cluster.local/v1/exhaust-port)

hubble observe --pod deathstar --protocol http

---

Oct 26 15:19:43.822: default/tiefighter:45910 (ID:56650) -> default/deathstar-689f66b57d-kccrz:80 (ID:17839) http-request FORWARDED (HTTP/1.1 POST http://deathstar.default.svc.cluster.local/v1/request-landing)

Oct 26 15:19:43.823: default/tiefighter:45910 (ID:56650) <- default/deathstar-689f66b57d-kccrz:80 (ID:17839) http-response FORWARDED (HTTP/1.1 200 2ms (POST http://deathstar.default.svc.cluster.local/v1/request-landing))

Oct 26 15:19:47.742: default/tiefighter:49920 (ID:56650) -> default/deathstar-689f66b57d-t99c9:80 (ID:17839) http-request DROPPED (HTTP/1.1 PUT http://deathstar.default.svc.cluster.local/v1/exhaust-port)

Oct 26 15:19:47.743: default/tiefighter:49920 (ID:56650) <- default/deathstar-689f66b57d-t99c9:80 (ID:17839) http-response FORWARDED (HTTP/1.1 403 0ms (PUT http://deathstar.default.svc.cluster.local/v1/exhaust-port))
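When the flow log gets long, it can help to tally verdicts instead of reading each line. The sketch below counts verdict keywords in `hubble observe` text output; `count_verdict` is a hypothetical helper, and the sample input is the PUT request/response pair from the output above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: reads hubble observe text on stdin and prints the
# number of lines containing the given verdict as a whole word.
count_verdict() {
  local verdict=$1
  # grep -c exits non-zero when the count is 0, hence the "|| true".
  grep -cw -- "$verdict" || true
}

flows='Oct 26 15:19:47.742: default/tiefighter:49920 (ID:56650) -> default/deathstar-689f66b57d-t99c9:80 (ID:17839) http-request DROPPED (HTTP/1.1 PUT http://deathstar.default.svc.cluster.local/v1/exhaust-port)
Oct 26 15:19:47.743: default/tiefighter:49920 (ID:56650) <- default/deathstar-689f66b57d-t99c9:80 (ID:17839) http-response FORWARDED (HTTP/1.1 403 0ms (PUT http://deathstar.default.svc.cluster.local/v1/exhaust-port))'

echo "DROPPED:   $(printf '%s\n' "$flows" | count_verdict DROPPED)"
echo "FORWARDED: $(printf '%s\n' "$flows" | count_verdict FORWARDED)"
```

Note that the 403 response itself is logged as FORWARDED: the request is dropped at L7, and the denial response is what travels back to the client.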

Bandwidth Manager

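The Bandwidth Manager is responsible for optimizing bandwidth and latency.

- It optimizes the TCP and UDP workloads of individual Pods and rate-limits them efficiently. The feature is implemented with EDT (Earliest Departure Time) and eBPF.

- It uses the kubernetes.io/egress-bandwidth Pod annotation, which limits egress bandwidth on the native host network devices.

- Both direct routing mode and tunneling mode are supported.

- Limitation: it cannot be combined with L7 Cilium Network Policies.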
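The idea behind EDT can be sketched with simple arithmetic: instead of queueing packets in a token bucket, each packet is stamped with the earliest time it may leave, spaced so the configured rate is never exceeded. The following is a toy model under that assumption, not Cilium's eBPF code; `edt_schedule` and its parameters are hypothetical names.

```shell
#!/usr/bin/env bash
# Toy EDT pacing model (hypothetical, for illustration only).
# edt_schedule RATE_BPS SIZE_BYTES COUNT
# Prints the departure time in microseconds assigned to each of COUNT
# equally sized packets that all become ready at t=0.
edt_schedule() {
  local rate_bps=$1 size_bytes=$2 count=$3
  local next_us=0 i
  for ((i = 0; i < count; i++)); do
    echo "$next_us"
    # Advance by the time one packet occupies the link at the given rate.
    next_us=$((next_us + size_bytes * 8 * 1000000 / rate_bps))
  done
}

# 1250-byte packets at 10 Mbit/s: one packet every 1000 microseconds.
edt_schedule 10000000 1250 3
```

Halving the rate doubles the spacing between departure times, which is how a lower annotation value translates into lower measured throughput.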

Hands-on

Configuration and verification

tc qdisc show dev ens5

---

qdisc mq 0: root

qdisc fq_codel 0: parent :4 limit 10240p flows 1024 quantum 1514 target 5ms interval 100ms memory_limit 32Mb ecn drop_batch 64

qdisc fq_codel 0: parent :3 limit 10240p flows 1024 quantum 1514 target 5ms interval 100ms memory_limit 32Mb ecn drop_batch 64

qdisc fq_codel 0: parent :2 limit 10240p flows 1024 quantum 1514 target 5ms interval 100ms memory_limit 32Mb ecn drop_batch 64

qdisc fq_codel 0: parent :1 limit 10240p flows 1024 quantum 1514 target 5ms interval 100ms memory_limit 32Mb ecn drop_batch 64

Configure

helm upgrade cilium cilium/cilium --namespace kube-system --reuse-values --set bandwidthManager.enabled=true

Verify that it is applied

cilium config view | grep bandwidth

---

enable-bandwidth-manager true

Check the operating interface

c0 status | grep BandwidthManager

---

BandwidthManager: EDT with BPF [CUBIC] [ens5]

Confirm the added options

tc qdisc show dev ens5

---

qdisc mq 8002: root

qdisc fq 8005: parent 8002:2 limit 10000p flow_limit 100p buckets 32768 orphan_mask 1023 quantum 18030b initial_quantum 90150b low_rate_threshold 550Kbit refill_delay 40ms timer_slack 10us horizon 2s horizon_drop

qdisc fq 8003: parent 8002:4 limit 10000p flow_limit 100p buckets 32768 orphan_mask 1023 quantum 18030b initial_quantum 90150b low_rate_threshold 550Kbit refill_delay 40ms timer_slack 10us horizon 2s horizon_drop

qdisc fq 8004: parent 8002:3 limit 10000p flow_limit 100p buckets 32768 orphan_mask 1023 quantum 18030b initial_quantum 90150b low_rate_threshold 550Kbit refill_delay 40ms timer_slack 10us horizon 2s horizon_drop

qdisc fq 8006: parent 8002:1 limit 10000p flow_limit 100p buckets 32768 orphan_mask 1023 quantum 18030b initial_quantum 90150b low_rate_threshold 550Kbit refill_delay 40ms timer_slack 10us horizon 2s horizon_drop

Test

Deploy the resources

cat <<EOF | kubectl apply -f -
---
apiVersion: v1
kind: Pod
metadata:
  annotations:
    # Limits egress bandwidth to 10Mbit/s.
    kubernetes.io/egress-bandwidth: "10M"
  labels:
    # This pod will act as server.
    app.kubernetes.io/name: netperf-server
  name: netperf-server
spec:
  containers:
  - name: netperf
    image: cilium/netperf
    ports:
    - containerPort: 12865
---
apiVersion: v1
kind: Pod
metadata:
  # This Pod will act as client.
  name: netperf-client
spec:
  affinity:
    # Prevents the client from being scheduled to the
    # same node as the server.
    podAntiAffinity:
      requiredDuringSchedulingIgnoredDuringExecution:
      - labelSelector:
          matchExpressions:
          - key: app.kubernetes.io/name
            operator: In
            values:
            - netperf-server
        topologyKey: kubernetes.io/hostname
  containers:
  - name: netperf
    args:
    - sleep
    - infinity
    image: cilium/netperf
EOF

Check the egress bandwidth limit

kubectl describe pod netperf-server | grep Annotations:

---

Annotations: kubernetes.io/egress-bandwidth: 10M

Check the limit from the Cilium Pod on the node that hosts the bandwidth-limited Pod

c1 bpf bandwidth list

---

IDENTITY EGRESS BANDWIDTH (BitsPerSec)

3548 10M

c1 endpoint list | grep 3548

---

3548 Disabled Disabled 57532 k8s:app.kubernetes.io/name=netperf-server 172.16.1.247 ready

Generate traffic (limited to 10M)

NETPERF_SERVER_IP=$(kubectl get pod netperf-server -o jsonpath='{.status.podIP}')

kubectl exec netperf-client -- netperf -t TCP_MAERTS -H "${NETPERF_SERVER_IP}"

---

MIGRATED TCP MAERTS TEST from 0.0.0.0 (0.0.0.0) port 0 AF_INET to 172.16.1.247 (172.16.) port 0 AF_INET

Recv   Send   Send
Socket Socket Message  Elapsed
Size   Size   Size     Time     Throughput
bytes  bytes  bytes    secs.    10^6bits/sec

131072 16384  16384    10.02    9.88

Limit to 5M

kubectl get pod netperf-server -o json | sed -e 's|10M|5M|g' | kubectl apply -f -

Generate traffic

kubectl exec netperf-client -- netperf -t TCP_MAERTS -H "${NETPERF_SERVER_IP}"

---

MIGRATED TCP MAERTS TEST from 0.0.0.0 (0.0.0.0) port 0 AF_INET to 172.16.1.247 (172.16.) port 0 AF_INET

Recv   Send   Send
Socket Socket Message  Elapsed
Size   Size   Size     Time     Throughput
bytes  bytes  bytes    secs.    10^6bits/sec

131072 16384  16384    10.07    4.91

Limit to 20M

kubectl get pod netperf-server -o json | sed -e 's|5M|20M|g' | kubectl apply -f -

Generate traffic

kubectl exec netperf-client -- netperf -t TCP_MAERTS -H "${NETPERF_SERVER_IP}"

---

MIGRATED TCP MAERTS TEST from 0.0.0.0 (0.0.0.0) port 0 AF_INET to 172.16.1.247 (172.16.) port 0 AF_INET

Recv   Send   Send
Socket Socket Message  Elapsed
Size   Size   Size     Time     Throughput
bytes  bytes  bytes    secs.    10^6bits/sec

131072 16384  16384    10.01    19.80
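The three runs above can be compared against their limits automatically. The sketch below extracts the throughput from netperf output and checks it against a limit in Mbit/s; `netperf_throughput` and `within_limit` are hypothetical helper names, and the sample input is taken from the 10M run.

```shell
#!/usr/bin/env bash
# Hypothetical helpers for checking netperf results against a limit.

# Reads netperf output on stdin and prints the throughput (10^6 bits/sec),
# taken as the last field of the last non-empty line.
netperf_throughput() {
  awk 'NF { last = $NF } END { print last }'
}

# within_limit THROUGHPUT LIMIT: succeeds when THROUGHPUT <= LIMIT.
within_limit() {
  awk -v t="$1" -v l="$2" 'BEGIN { exit !(t + 0 <= l + 0) }'
}

sample='Size Size Size Time Throughput
bytes bytes bytes secs. 10^6bits/sec

131072 16384 16384 10.02 9.88'

mbps=$(printf '%s\n' "$sample" | netperf_throughput)
if within_limit "$mbps" 10; then
  echo "OK: ${mbps} Mbit/s is within the 10M limit"
else
  echo "FAIL: ${mbps} Mbit/s exceeds the 10M limit"
fi
```

In a live cluster, the `kubectl exec netperf-client -- netperf ...` command could be piped into `netperf_throughput` in place of the sample text.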